![[*]](footnote.png) and J. S. Devitt

and J. S. Devitt![[*]](footnote.png) D Operator

D Operator

Introduction

In this article we would like to inform our readers and users about

the development of the D operator in Maple.

As with many major tasks in system development, getting something like

this nicely integrated into a system, and working correctly,

notationally correct, and making it easy to use, requires the design

of new facilities and changes to many parts of the system.

Although ``differentiation'' is often regarded as a relatively simple task for a computer algebra system, it turns out that this is not actually the case. A paper by Stanly Steinberg and Michael Wester [1] presented at the 1984 Macsyma Users Conference pointed out problems with the differentiation facility in the computer algebra systems available at that time. In particular, Maple and other systems could not distinguish correctly between total and partial derivatives.

Operators were first introduced into Maple in version 4.2

by Gaston Gonnet [2]. The addition of the D operator

addressed the distinction of total and partial derivatives, and also

a representation of the derivative of a function evaluated at a

point (for example, y'(0) by D(y)(0)). Partial derivatives and the ability to

apply the chain rule to an unknown function were added in Maple V.

Presently the D operator is being extended to address the

problem of algorithmic differentiation, that is, to differentiate

Maple procedures.

In this article we follow the development of the D operator

by way of examples discussing some of details and system design

issues as we go.

Functions – Expressions or Mappings?

Users will find two facilities for differentiation in Maple,

the diff procedure, and the D procedure.

The diff procedure takes as input what Maple calls

an expression or a formula which is a function of

zero of more variables (

x1, x2,…xn) which appear explicitly

in the expression.

It computes the partial derivative of the formula with respect to

a given variable.

The D procedure in Maple (often called the

D operator) takes as input a function which is

a mapping from

RN→R. In Maple this is called an

operator or a mapping.

For example, sin(x) is an expression in x but sin by itself is a

mapping from

R→R.

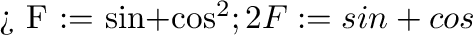

Another mapping in Maple is sin + cos2.

A mapping can always be applied to an argument.

For example, given the mapping

if we apply it to a number we get a number and if we apply it to a formula we get a formula, e.g.

Strictly speaking,

Maple would call both the formula

sin(x) + cos(x)2 and

the mapping

sin + cos2 expressions.

Any distinction between the two comes from how we use them.

Mappings are mappings because our intention is

to apply them to arguments, while formulae are the

result of applying mappings to their arguments.

Throughout this article we will call the former

mappings and the latter formulae.

Thus the diff procedure differentiates a formula and returns

a formula. The D operator differentiates a mapping

and returns a mapping. Compare

Note, for mappings, functional composition is represented

explicitly by use of the @ operator, and repeated composition

is represented by the @@ operator. Compare

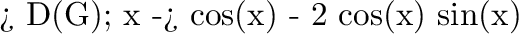

Derivatives of Unknown Functions

As well as being able to compute with known functions, like

sin, cos, exp, ln, etc., Maple has always supported the ability to

compute with unknown functions. In the following examples

of partial and repeated partial differentiation of an unknown function f, the

notation D[i](f) means the partial derivative of f with

respect to the ith argument.

;

D[2](f)

\p...

...1](f) = D[1](D[2](D[1](f)));

\par

D[1, 1, 2](f) = D[1, 1, 2](f)

\par

\end{maple}](img5.png)

In Maple, the D operator is an ordinary Maple procedure.

When we input D[1,2,1](f), what happens?

If D was an array or table then the entry D[1,2,1]

would be applied to f. In the case of a procedure, what happens

is that it is called with the given arguments and inside

the procedure the procname variable's value will be the subscript.

In our example, D is called with f as an argument

and the value of procname will be D[1,2,1].

The D code sorts the indices (assumes partial derivatives commute)

and returns D[1,1,2](f) unevaluated.

This subscripted function calling facility is new in Maple V.

It is also used for the log function for different bases.

For example, log[b](x) means logbx i.e. logarithm base b of x.

One of the main reasons why Maple has a D operator as well

as a diff procedure is because it is not possible to specify y'(0) using

diff. Maple users reading this article might think of

using the Maple subs procedure e.g. subs(x=0,diff(sin(x),x)).

This works if Maple can actually differentiate the function

but it will not work for an unknown function y.

Being able to represent y'(0) simply as D(y)(0) motivated the

introduction of operators, in particular the D operator in Maple.

This notation is used to specify the initial conditions for the

dsolve procedure, which solves systems of ODE's,

replacing an earlier defunct notation

yp(0), ypp(0),…

It is also used in series; for example, here is the

Taylor series for an unknown function f to order O(x6)

And here is a multivariate Taylor series to third order.

Without use of the D operator it would be difficult to apply

the chain rule to unknown functions. For example, we have

(x , x y)

\par

> D(f@g);

\par

D(f)@g D(g)

\par

\end{maple}](img8.png)

There are distinct advantages to manipulating mappings as if they were expressions. The following example illustrates implicit differentiation of y as a function of x. Given the equation

we can regard x and y as arbitrary mappings. Then applying

D to both sides of the equation we have

This equation can be interpreted in many different ways. For example, it can be solved to obtain a formula for D(y)

while the interpretation that x is an independent variable can be indicated by the substitution

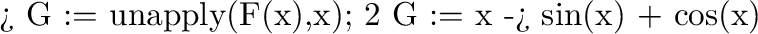

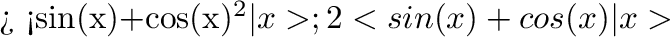

Earlier we mentioned that application of a mapping to a symbolic

variable yields a formula. And hence the identity

D(f)(x) = diff(f(x),x). For example

Given the formula, how can we get back the mapping F?

This is called lambda abstraction in the language of lambda calculus.

In Maple it is called unapply because it

is the inverse of application, that is, it takes a formula and returns

a mapping. For example

is a mapping in the form of a Maple procedure equivalent to

proc(x) sin(x)+cos(x)^2 end except it has been

displayed using a more succinct format,

known as arrow operators. The arrow notation above is used often in algebra.

This new notation is essentially equivalent to Maple's

older angle bracket notation

differing only in how the procedure is entered and displayed.

Another notation for functions that is used often in applied mathematics

is the

F(x) = sin(x) + cos(x)2 notation. In Maple one might use

F(x):=sin(x)+cos(x)^2, but this already has a meaning in Maple (which

unfortunately is different) and this does lead to confusion.

The meaning of F(x):=y is to enter the entry (x, y) in F's remember

table so that when F is called with the literal symbol

x, y is returned. It is rather like making F work like a table

of values.

Equivalence of Mappings

Notice though, that unapply did not return the mapping

in the same form F that we started with.

This raises another question, namely, given two mappings, how could one

test if they are the same? Users are familiar with the problem of testing

whether two formulae are the same. For example, suppose we are

given the two formulae

How would we test whether f1 = f2? This is the problem of simplification, or zero recognition. In Maple, one would use the expand (or simplify) function as follows

In this case, expand applies the transformation cos(2x) = 2cos(x)2 - 1 hence recognizing that f1 = f2. But what about mappings?

Expand tries to write an arrow (or angle bracket) operator as an algebraic combination of other mappings, in this case allowing us to recognize that F and G are effectively the same mapping.

Maple can differentiate those arrow operators too, even in their unexpanded form.

Notational Equivalence and Conversions

Two important identities relating D and

diff are D(f)(x) = diff(f(x),x) (and its

multiviate counterpart), and D(f) = unapply(diff(f(x),x)).

When two notations are involved for essentially the same

expression, it is essential to be able to convert from

one notation to the other. For example

Algorithmic Differentiation

The use of arrow operators, or more generally, arbitrary procedures leads us to the interesting problem of program differentiation. Consider the function f defined by the following Maple procedure

What is its derivative? We could compute its value as a formula and differentiate the formula.

In the Maple V Share Library![[*]](footnote.png) there is a facility for differentiating

programs. The

there is a facility for differentiating

programs. The PD procedure takes as input a Maple procedure f which

is a function of n parameters, and a positive integer i, and it returns

a Maple procedure which computes the partial derivative of f with

respect to the ith parameter.

For example

Does the procedure g really compute f'? In this case we can prove that it does by executing the procedure on symbolic parameters, in effect converting the function represented by the procedure into a formula.

Clearly one couldn't do this if a procedure had a conditional statement involving the formal parameter x and one called the procedure with a symbolic parameter, but under what conditions could one differentiate a procedure involving more than just an expression? For example, can this be done if the procedure had loops or subroutine calls? It turns out that the answer to this question is, surprisingly, yes. And moreover, there exists a very simple algorithm for computing the derivative of a procedure or a program.

To construct the derivative procedure, for each assignment statement v : = f (v1,…, vn) that appears in the procedure, where the vi are local variables or formal parameters, precede it by vx : = g(v1,…, vn) where g(v1,…, vn) is obtained by differentiating f (v1,…, vn) formally. That is, vi may depend on x, hence its derivative will be vix. Replace the last statement (or any RETURN value) by its derivative. This very simple algorithm is called ``forward differentiation''. There is actually quite a lot of literature on this subject. Many different algorithms, and quite a number of implementations have been written to differentiate Fortran code. For a good reference see [3], which also contains a fairly complete bibliography on algorithmic differentiation.

Here is an example which illustrates the power of algorithmic differentiation. In this example, the use of a loop allows us to represent a very large formula in a very compact way. There is a theoretical gain here. In general, a function that can be represented by a formula can be represented by a program in an exponentially more compact way by using local variables and loops.

Another nice theoretical result is that the size of the resulting program which computes the derivative is linear in the size of the original program. In fact, in most cases, it is not much bigger. What can algorithmic differentiation be used for? In numerical computation, one often is given a function f : RN→R, where f is given by a program, rather than an analytic formula. The standard fast methods for computing the zeros of f or the extrema require the derivatives of f. An example is given in the article The Billiard Problem in this issue, by Walter Gander and Dominik Gruntz, where a Newton iteration is used to find the zeroes of a function.

Here is another example where we are given a function which evaluates a polynomial input as an array of coefficients using Horner's rule and we compute its derivative.

It may not be apparent from these examples, but the difficult part of algorithmic differentiation is program optimization. This is because differentiation produces redundant computation. For example, repeatedly differentiating formulae results in lots of repeated common subexpressions. And, when computing partial derivatives, a lot of zeroes may result. Thus two of the main focuses of algorithmic differentiation is to avoid as much of this redundancy as possible and do program optimization. We are presently working on extending Maple's capabilities for differentiating procedures to compute gradients and jacobians, and improving the optimization of the resulting procedures.